Every day, governments generate massive amounts of data, spanning everything from birth certificates to weather data. Effectively managing and sharing that data can deliver a wide range of benefits to governments, the private sector, academia, and — crucially — to people.

Governments embark on building and improving the systems that enable data exchange for a few main reasons – namely to improve the delivery and scope of services, increase the operational efficiency of government processes, and provide an ecosystem that supports economic development.

In turn, achieving these goals can result in positive outcomes, such as strengthening access to – and quality of – services for people, stimulating innovation and new business models, and improving transparency and accountability.

But to realize these benefits for people and communities, good data exchange systems are key.

To support governments, development actors, and policymakers in these efforts, we have used a technical lens to outline some of the foundational components – and attributes – that make up effective, trusted national data exchange systems.

Data’s value is far-reaching – spanning organizations, sectors, and communities.

Getting national data exchange systems right starts with understanding data — how it is produced, transported, and consumed.

As a key component of today’s digital world, data is an infinitely shareable – and reusable – resource. The same data may be used in multiple contexts, for different purposes, to provide a variety of insights and solutions.

For example, a single government agency may provide information to thousands of different organizations and individuals in the form of:

- Identity data — enabling access to, and delivery of, services, as well as making government services more efficient.

- Health data — advancing better health outcomes for individual citizens, as well as more effective health crisis response.

- Weather data — supporting better drought prediction, response, and remediation.

These examples illustrate just a few of the many ways that different government institutions can leverage data to provide outsized value and benefits to people and society.

But responsibly sharing data is no easy feat. National data exchange systems must be thoughtfully designed, implemented, and governed.

National data exchange systems are complex, especially given the wide range of data types that governments create. Key questions that need to be answered include:

- What type of data is being produced?

- Who is producing the data?

- Where and how is the data being stored?

- How does the data consumer know where to find the data (producer)?

- How does the data consumer request this data?

- How does the data producer verify that the request is legitimate?

- How is the data moved from the data producer to the data consumer?

Each of these questions may have multiple answers. National data exchange systems must be able to handle the many scenarios these answers represent.

Correctly balancing the required attributes with each unique scenario is key to designing, implementing, and governing effective data exchange systems that meet the needs of all stakeholders.

Along the way, those building data exchange systems face a multitude of challenges. These range from integrating existing systems and handling a myriad of data types to addressing security issues and navigating the policy, regulatory, and political realities of data management and control, all of which manifest in the design and operation of data exchange systems.

What are the foundational components of national data exchange systems?

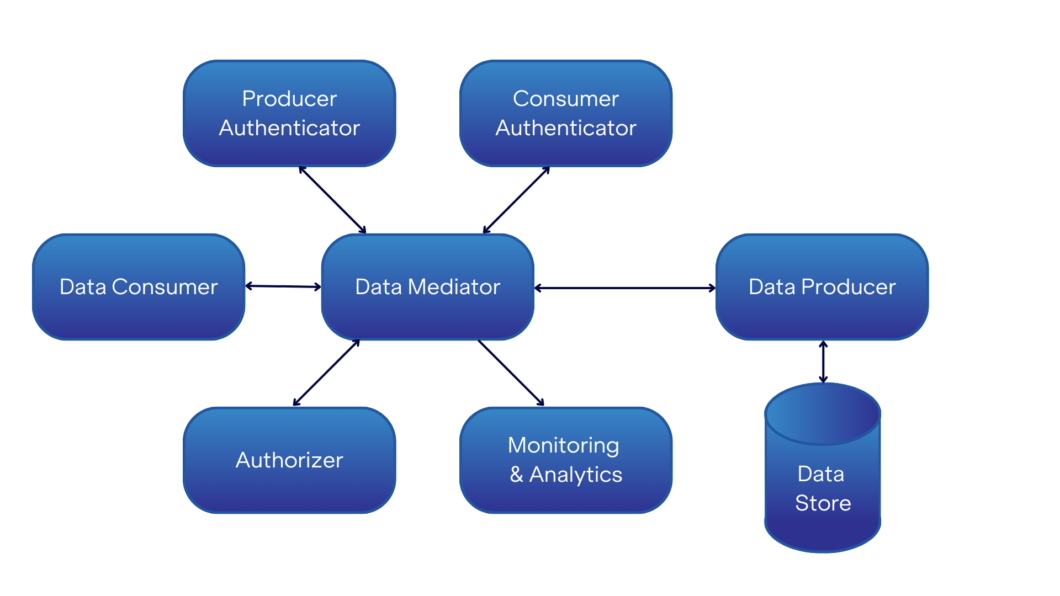

Data exchange systems are diverse. Multiple approaches and techniques may be required to cover all the different types of data that these systems must support as part of a national data strategy. Even with their differences, these varied approaches will generally have the following abstracted components:

- Data producer — the entity that creates the data. Example: Medical facility.

- Data store — where the data producer stores the data. Example: Database of medical records.

- Data consumer — the entity that wants to use the data. Example: Doctor.

- Authorizer — the process that determines if the data consumer is authorized to access the specific requested data from the data producer. Example: Access rules.

- Producer authenticator — the process that verifies the data producer is who they purport to be. Example: Security Server.

- Monitoring and analytics — the tools that monitor and analyze the performance of the data exchange system. Example: Administrative dashboard.

In addition to each of these components, some data exchange system designs also make use of:

- Consumer authenticator — the process that verifies the data consumer is who they purport to be. Example: Identity and Access Management (IAM) system.

- Data mediator — a system that sits between the data consumer and the data producer and acts as a proxy or middleman. Example: Data aggregator.

To meet the requirements for specific scenarios or situations, these components can be arranged in different configurations. While the different variations are too numerous to fully explore here, the diagram below provides one possible approach using a data mediator.

In this approach, the data mediator is used to validate all requests and responses to ensure that:

- The data consumer and data producer are both who they say they are.

- The data consumer is authorized to make the request.

In this approach, the data mediator is central to the process. The data consumer contacts the data mediator with a request. The mediator then handles nearly all of the rest of the transaction: it gathers the requested data from the data producer, performs the necessary authentication and authorization along the way, and delivers the data to the data consumer. Other approaches divide these responsibilities differently.

In this design, the data consumer does not necessarily know who the data producer is. Along the same lines, the identity of the data consumer may be hidden from the data producer. In some scenarios, this may be a desirable feature. For example, in a digital identity context, you may not want the identity issuer (the data provider) to know where the person was using their identity, just whether it was valid.
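To make the mediator flow more concrete, here is a minimal Python sketch that maps the abstract components listed earlier onto code: a consumer authenticator (a token table), an authorizer (access rules), a producer authenticator (a check on the producer's response), and monitoring (an audit log). Every name in it (`DataMediator`, `Producer`, `sign`, the tokens and datasets) is hypothetical. It also illustrates the privacy property described above: the consumer asks only for a dataset by name and never learns which producer holds it, while the producer sees only an opaque request ID. For brevity, producers authenticate with shared HMAC secrets; real platforms typically rely on public-key certificates and digital signatures instead.

```python
import hashlib
import hmac
import json
import secrets
from dataclasses import dataclass, field


def sign(secret: bytes, record: dict) -> str:
    """HMAC-SHA256 over a deterministic JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode()
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


@dataclass
class Producer:
    """A data producer with its own data store and signing secret."""
    secret: bytes
    store: dict[str, dict]

    def fetch(self, request_id: str, record_id: str) -> tuple[dict, str]:
        # The producer sees only an opaque request ID, never the consumer's identity.
        record = self.store[record_id]
        return record, sign(self.secret, record)


@dataclass
class DataMediator:
    consumer_tokens: dict[str, str]     # token -> consumer ID (consumer authenticator)
    access_rules: set[tuple[str, str]]  # allowed (consumer ID, dataset) pairs (authorizer)
    catalog: dict[str, str]             # dataset -> producer name (hidden from consumers)
    producers: dict[str, Producer]      # producer name -> producer endpoint
    producer_secrets: dict[str, bytes]  # secrets registered at onboarding (producer authenticator)
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)  # monitoring

    def request(self, token: str, dataset: str, record_id: str) -> dict:
        consumer = self.consumer_tokens.get(token)
        if consumer is None:
            raise PermissionError("consumer authentication failed")
        if (consumer, dataset) not in self.access_rules:
            raise PermissionError(f"{consumer} is not authorized for {dataset}")
        producer_name = self.catalog[dataset]
        request_id = secrets.token_hex(8)
        record, signature = self.producers[producer_name].fetch(request_id, record_id)
        # Confirm the response really came from the registered producer.
        expected = sign(self.producer_secrets[producer_name], record)
        if not hmac.compare_digest(signature, expected):
            raise PermissionError("producer authentication failed")
        self.audit_log.append((request_id, consumer, dataset))
        return record


clinic = Producer(secret=b"clinic-key", store={"p1": {"blood_type": "O+"}})
mediator = DataMediator(
    consumer_tokens={"tok-doctor": "dr_lee"},
    access_rules={("dr_lee", "health_record")},
    catalog={"health_record": "clinic"},
    producers={"clinic": clinic},
    producer_secrets={"clinic": b"clinic-key"},
)
print(mediator.request("tok-doctor", "health_record", "p1"))  # {'blood_type': 'O+'}
```

In this configuration the mediator is the only party that can link a consumer to a producer, through its audit log. That is exactly why such a mediator needs strong governance of its own.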

Below are three common approaches used in certain data exchange scenarios. Each has strengths and weaknesses, and none is ideal for every situation. Most data exchange systems will make use of more than one of the approaches outlined here.

Open access

The open access approach is the most permissive and is used for public data. In these systems, generally anyone and everyone is authorized to request and receive the data provided. Often, the only technical restrictions enforced are rate limits, which control how much and how fast data can be requested.
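Rate limits of this kind are commonly implemented with a token bucket. Each client gets a bucket that refills at a fixed rate, and each request spends one token, so short bursts are allowed while the sustained rate stays capped. The following is a minimal sketch, assuming clients are identified by something like an IP address or API key. The capacity and refill rate are arbitrary illustrative values, not recommendations, and the class names are hypothetical.

```python
import time
from typing import Optional


class TokenBucket:
    """Holds up to `capacity` tokens and refills at `refill_per_second`."""

    def __init__(self, capacity: float, refill_per_second: float, now: float) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = capacity
        self.last = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to the time elapsed since the last request.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1  # spend one token for this request
            return True
        return False          # bucket empty: reject (e.g. with HTTP 429)


class RateLimiter:
    """Keeps one bucket per client, keyed by e.g. an IP address or API key."""

    def __init__(self, capacity: float, refill_per_second: float) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.buckets: dict[str, TokenBucket] = {}

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.capacity, self.refill_per_second, now)
        return self.buckets[client_id].allow(now)


limiter = RateLimiter(capacity=3, refill_per_second=1.0)
print([limiter.allow("203.0.113.7", now=0.0) for _ in range(4)])  # [True, True, True, False]
print(limiter.allow("203.0.113.7", now=1.0))  # True: one token refilled after 1 s
```

The optional `now` argument exists only to make the behavior easy to demonstrate. In normal use the limiter reads the monotonic clock itself.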

Each open access system will have its own methods for making data available, with varying levels of programmatic access for automated processes.

There are many examples of open access systems around the world, including Colombia’s Sinergia indicator tracker and World Bank Open Data.

API gateway

The API gateway approach is designed with more robust access control, making it a good match for allowing third-party (e.g., commercial or other non-governmental) access to controlled data.

In this model, the gateway sits between the data consumer and the data provider. The data consumer is required to research the available services and request requirements (a process called “discovery”) before making a request. The gateway then manages the authentication and authorization of the request, as well as other security and monitoring, forwarding valid requests to the data provider. Data is returned to the data consumer via the gateway.

Data exchange systems that use an API gateway approach may have one or many API gateways. The data consumer is responsible for knowing which API gateway to connect to, as well as establishing a relationship with the API gateway that would allow for data requests.
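The gateway responsibilities described above (discovery, establishing a relationship with the consumer, authentication, authorization, monitoring, and forwarding) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how any particular platform works. API keys with per-service scopes stand in for whatever credential scheme a real gateway uses, the backend is a plain function standing in for the data provider, and all names and the returned figure are hypothetical dummy values.

```python
from dataclasses import dataclass, field
from typing import Callable

Backend = Callable[[dict], dict]  # stands in for a call to the data provider


@dataclass
class ApiGateway:
    services: dict[str, tuple[Backend, frozenset[str]]] = field(default_factory=dict)
    api_keys: dict[str, set[str]] = field(default_factory=dict)  # key -> granted services
    request_log: list[tuple[str, str, int]] = field(default_factory=list)

    def register_service(self, name: str, backend: Backend, required_params: set[str]) -> None:
        self.services[name] = (backend, frozenset(required_params))

    def discover(self) -> dict[str, list[str]]:
        """Discovery: list services and the parameters each request must include."""
        return {name: sorted(params) for name, (_, params) in self.services.items()}

    def onboard(self, api_key: str, scopes: set[str]) -> None:
        """Establish the consumer's relationship with this gateway."""
        self.api_keys[api_key] = set(scopes)

    def handle(self, api_key: str, service: str, params: dict) -> tuple[int, dict]:
        if api_key not in self.api_keys:               # authentication
            status, body = 401, {"error": "unknown API key"}
        elif service not in self.services:
            status, body = 404, {"error": "no such service"}
        elif service not in self.api_keys[api_key]:    # authorization
            status, body = 403, {"error": "key lacks scope for this service"}
        else:
            backend, required = self.services[service]
            missing = required - set(params)
            if missing:
                status, body = 400, {"error": f"missing parameters: {sorted(missing)}"}
            else:
                status, body = 200, backend(params)    # forward to the data provider
        self.request_log.append((api_key, service, status))  # monitoring
        return status, body


def population_backend(params: dict) -> dict:
    # Hypothetical data provider returning a fixed dummy figure.
    return {"district": params["district"], "population": 12345}


gateway = ApiGateway()
gateway.register_service("population", population_backend, {"district"})
gateway.onboard("key-abc", {"population"})
print(gateway.discover())  # {'population': ['district']}
print(gateway.handle("key-abc", "population", {"district": "North"})[0])  # 200
print(gateway.handle("key-xyz", "population", {"district": "North"})[0])  # 401
```

A deployment with several gateways would run several independent instances like this one, which is why the consumer has to know which gateway to contact and must onboard with each separately.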

Singapore’s APEX Cloud and Uganda’s UGhub Systems and Data Integration Platform are both examples of the API gateway approach.

Secure integrated platform

Some other approaches are even more prescriptive, maintaining greater centralized control over more of the components. These approaches can work well when there is complete policy control over all the participating systems (e.g., a unified government ICT approach).

In this model, data consumers and data producers are more tightly bound to the platform, which handles all (or most) of the tasks needed for the data request to be made and fulfilled. More formal methods are generally used to onboard both data consumers and data providers onto the platform. This approach tends to work best when just a single instance of the platform is being used.

Examples of this approach include Estonia’s X-Road and Rwanda’s Enterprise Architecture Framework (RGEA).

What are some of the key attributes of data exchange systems?

Successful data exchange systems are much more than the technology on which they are built. Ultimately, what matters is how the resulting information is used in ways that affect people's lives. Several attributes are central to ensuring these systems are safe, efficient, and widely adopted.

Trust

Everything starts here. Trust is multifaceted, and it takes time and effort to build. Some of the facets of trust needed for a successful system include:

- Parties can trust that the other party is who they say they are.

- The data producer can trust that the data consumer has a legitimate right to access the requested data.

- The data consumer can trust that the received data is correct and that it has not been tampered with.

- The data producer can trust that the data consumer has been properly vetted for access.

- The data producer can trust that the data will only be used for authorized uses.

- All parties can trust that sensitive data (e.g., personally identifiable information (PII), personal health information (PHI), or financial information) is properly protected.

- All parties can trust that privacy and other data protections are in place and enforced.

Trust relationships exist either formally or informally between all participants within the data exchange process. Policy, regulation, and enforcement — when used in conjunction with technology — are key to building and maintaining these trust relationships.

Governments around the world face problems created by low public trust. This sometimes manifests as low uptake of new systems, or even as legal challenges, as seen in several countries in recent years. The importance of trust in this context is summed up by the following statement from the United States Department of Homeland Security:

Trust is a key element of electronic transactions between natural persons and non-person entities, such as organizations and machines. Ensuring the source, confidentiality, integrity, and availability of data is critical to our ability to transact with trust and maintain privacy across interconnected services, devices, and users. The proliferation of online services and cloud computing are enabling new operational efficiencies, while simultaneously creating novel risks.

Although the statement refers to transactions between natural persons and non-person entities, the same principles apply to any combination of the two. In data exchange, transactions between two non-person entities are also common, and they still need the benefits that trust provides.

While time-consuming to build, trust can easily be lost. The right tools and transparency are important to fostering an environment where trust can be successfully built. One such example of tools and transparency going hand in hand is Belgium’s MyData portal, which allows people to see who has accessed their information in federal data sources over the last 12 months (excepting judicial and law enforcement requests). This effort is part of a multi-stage initiative to improve transparency and increase trust.
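A transparency portal of this kind depends on every access being recorded and then filtered for the person viewing it. The sketch below is a minimal illustration, not a description of how MyData is built. The event fields, category labels, and identifiers are hypothetical, and 365 days is used as an approximation of "the last 12 months". The exclusion of judicial and law-enforcement requests mirrors the description above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

EXCLUDED_CATEGORIES = {"judicial", "law_enforcement"}  # hidden from the subject's view


@dataclass(frozen=True)
class AccessEvent:
    requester: str         # organization that accessed the data
    subject_id: str        # person the data is about
    source: str            # data source that was queried
    category: str          # e.g. "service_delivery", "judicial"
    accessed_at: datetime


def accesses_visible_to(subject_id: str, log: list[AccessEvent], now: datetime) -> list[AccessEvent]:
    """Return who accessed this person's data in roughly the last 12 months, newest first."""
    cutoff = now - timedelta(days=365)
    visible = [
        e for e in log
        if e.subject_id == subject_id
        and e.accessed_at >= cutoff
        and e.category not in EXCLUDED_CATEGORIES
    ]
    return sorted(visible, key=lambda e: e.accessed_at, reverse=True)


now = datetime(2024, 6, 1)
log = [
    AccessEvent("Tax Office", "p-42", "income_register", "service_delivery", datetime(2024, 3, 10)),
    AccessEvent("Police", "p-42", "population_register", "law_enforcement", datetime(2024, 4, 2)),
    AccessEvent("Pension Fund", "p-42", "population_register", "service_delivery", datetime(2022, 1, 5)),
    AccessEvent("Health Insurer", "p-7", "population_register", "service_delivery", datetime(2024, 5, 1)),
]
for event in accesses_visible_to("p-42", log, now):
    print(event.requester, event.accessed_at.date())  # Tax Office 2024-03-10
```

The filtering itself is trivial. The harder requirement, which this sketch simply assumes, is that every participating data source reliably writes an event for every access.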

Security

Directly underpinning trust, security plays a significant role in the success of integrated national data exchange systems. Security must be present at all levels and with all stakeholders that interact with the data exchange systems. A successful architecture design will address security issues such as:

- Encryption at rest — Data needs to be protected at every step in the process. Encryption at rest means the data is encrypted when it is not actively being processed, such as when it is sitting on a hard disk or otherwise archived.

- End-to-end encryption — While in transit, data can be subjected to myriad attacks, such as eavesdropping or man-in-the-middle attacks. End-to-end encryption addresses this by ensuring the data is never decrypted while in transit: the sender encrypts it, and only the final recipient can decrypt it.

- Levels of assurance — The greater the importance or risk of a particular exchange of data, the greater the desire to know that the other party is who they claim to be. Levels of assurance formalize what steps need to be taken to obtain a certain level of trust.

- Authentication methods — The three most common ways of proving your identity are 1. something you know (e.g., a password), 2. something you have (e.g., an ID card), and 3. something you are (e.g., biometrics). The application of these — individually or in combination — describes the process used to authenticate an identity.

- Physical security — Software and hardware security measures lose much of their effectiveness if the equipment is not kept in a secure location or otherwise physically protected.

- Least privilege rule — With regard to security (whether physical or virtual), people should only have the minimum level of access necessary to fulfil their role. For example, not everyone that works at a bank should have access to the bank vault.

- Data minimization — With physical documents, all data in the document was transferred, even when only part of it was needed. Digital approaches can remove the need for this practice, replacing it with one where only the minimum needed data is exchanged, also known as data minimization.
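Least privilege and data minimization work well together. Each role is granted only the fields its task requires, anything not explicitly granted is denied, and where possible a derived answer is shared instead of the underlying data. The minimal sketch below assumes hypothetical roles, field names, and a sample record. Its `is_over_18` attribute shows the classic minimization example: a service that only needs to know whether someone is an adult receives a yes/no answer rather than a full date of birth.

```python
from datetime import date

# Hypothetical role-to-field grants: each role sees only what its task requires.
ROLE_FIELDS: dict[str, frozenset[str]] = {
    "age_verifier": frozenset({"is_over_18"}),
    "benefits_officer": frozenset({"name", "address", "household_size"}),
    "doctor": frozenset({"name", "date_of_birth", "allergies"}),
}


def minimized_view(record: dict, role: str, today: date) -> dict:
    """Return only the fields the role is entitled to; unknown roles get nothing."""
    allowed = ROLE_FIELDS.get(role, frozenset())  # deny by default (least privilege)
    enriched = dict(record)
    dob = record.get("date_of_birth")
    if dob is not None:
        # Full years of age, subtracting one if this year's birthday hasn't happened yet.
        age = today.year - dob.year - ((today.month, today.day) < (dob.month, dob.day))
        enriched["is_over_18"] = age >= 18  # derived attribute hides the exact birth date
    return {k: v for k, v in enriched.items() if k in allowed}


citizen = {
    "name": "A. Example",
    "date_of_birth": date(2001, 9, 15),
    "address": "1 Main St",
    "household_size": 3,
    "allergies": ["penicillin"],
}
today = date(2024, 6, 1)
print(minimized_view(citizen, "age_verifier", today))  # {'is_over_18': True}
print(minimized_view(citizen, "visitor", today))       # {}
```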

The type of data and the context in which it is used will determine the relative importance of each of these, and how each is implemented. In every case, each should be considered. Many government data stores contain information highly sought after by hackers and other bad actors. Once again, policy, regulation, and enforcement play a key role in maintaining proper security protocols to minimize the risks of holding this data.

All countries are striving for sufficient security as they engage in an arms race against bad actors. One example is Singapore’s SingPass, which allows citizens' personal data to be shared for different services. SingPass supports multifactor verification using both biometrics and SMS-based 2FA, giving citizens a choice while still pushing for better security.

Interoperability

A single data provider may supply data to many data consumers, and a single data consumer may request data from multiple data providers. Given the potential complexity, development costs, and maintenance costs, it is often infeasible for each of these interactions to have a bespoke process for exchanging data. That would be analogous to having a different method of placing a phone call for each person one was trying to contact.

Standardization is key.

There are multiple aspects of a data exchange system that must be standardized for a successful exchange. For example, the transport layer, method of presenting one’s identity, acceptable data formats, and the data required to make a request all need to be understood and agreed upon by all participants.

When all the necessary parts are standardized, we can achieve interoperability. If using shared standards, interoperability can often be extended to allow two or more data exchange systems to share data.
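One way to picture standardizing "the data required to make a request" is a shared request envelope that every participant encodes and validates in exactly the same way. The minimal sketch below assumes a hypothetical envelope with five fields and a hypothetical version string. A real system would adopt or profile an agreed standard (for health records, HL7 FHIR, for example) rather than invent its own format.

```python
import json
from dataclasses import asdict, dataclass

SCHEMA_VERSION = "1.0"  # hypothetical version string agreed by all participants
REQUIRED_FIELDS = {"schema_version", "requester_id", "dataset", "purpose", "record_id"}


@dataclass(frozen=True)
class ExchangeRequest:
    schema_version: str
    requester_id: str
    dataset: str
    purpose: str
    record_id: str

    def to_json(self) -> str:
        # Sorted keys give a deterministic encoding of the same request.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "ExchangeRequest":
        """Parse and validate a request the same way every participant would."""
        data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad input
        if not isinstance(data, dict):
            raise ValueError("request must be a JSON object")
        missing = REQUIRED_FIELDS - set(data)
        unknown = set(data) - REQUIRED_FIELDS
        if missing or unknown:
            raise ValueError(f"missing={sorted(missing)} unknown={sorted(unknown)}")
        if data["schema_version"] != SCHEMA_VERSION:
            raise ValueError(f"unsupported schema version: {data['schema_version']}")
        if not all(isinstance(v, str) and v for v in data.values()):
            raise ValueError("all fields must be non-empty strings")
        return cls(**data)


req = ExchangeRequest(SCHEMA_VERSION, "ministry-of-health", "birth_registry", "vaccination_schedule", "r-001")
print(ExchangeRequest.from_json(req.to_json()) == req)  # True
```

Rejecting unknown fields and unsupported versions is a deliberately strict choice. It forces changes to go through the shared standard instead of drifting into bilateral variations, at the cost of making upgrades a coordinated effort.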

Adopting existing standards (preferably open standards) for each of these aspects makes interoperability easier to achieve and can bring further benefits, such as lower integration costs and less dependence on any single vendor.

On the international stage, cross-border interoperability can still be difficult to realize. Efforts such as the EU’s General Data Protection Regulation (GDPR) provide useful examples of the strengths and weaknesses of these approaches. At a technical level, standards from organizations such as ISO, W3C, NIST, and ITU can help achieve better interoperability. Industry-specific standards, such as HL7 FHIR for medical records, also play a vital role.

Good national data exchange systems will try to balance all the interoperability needs of the different stakeholders. Through an iterative process, interoperability should be improved over time.

Consent

The basic idea of consent is that data should only be received and used by the data consumer with proper authorization. A much more complicated set of questions is exactly who provides what authorization for what types of usage through which means. The answer to this depends on the scope of data covered by the data exchange system. For example, an open access system’s owner may allow any user to use the data for any purposes, while a system dealing with medical or financial records will be much more restrictive.

When talking about consent, the most common paradigm is dealing with data about a person, especially sensitive data (health, financial, movement, etc.). Under most data protection laws, the individual the data is about (the subject) is the source of the authorization to use this data. For the data consumer to receive and use the data from the data provider, they need permission from the subject.

This becomes further complicated in that not all subjects can give consent (e.g., minors). In some circumstances, consent may be given through other legal or judicial means without the subject’s knowledge (e.g., a court-ordered search warrant). There can also be questions as to whether the consent was freely given with a full understanding of the ramifications of that action (meaningful, informed consent). The system’s design impacts all of these.
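At the mechanism level, consent can be represented as records the system checks before releasing data. The minimal sketch below assumes a hypothetical consent register keyed by subject, consumer, and purpose, with an expiry date. It models two of the complications just described: a guardian granting consent on behalf of a minor, and a legal order (such as a court-issued warrant) that permits access without the subject's consent. Whether consent was meaningful and informed cannot be captured by a lookup like this; that depends on policy and on how consent is collected.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Consent:
    subject_id: str
    consumer_id: str
    purpose: str
    granted_by: str  # the subject, or a guardian when the subject is a minor
    expires: date


@dataclass(frozen=True)
class LegalOrder:
    subject_id: str
    consumer_id: str
    reference: str   # hypothetical order reference


class ConsentRegister:
    def __init__(self, guardians: dict[str, str], minors: set[str]) -> None:
        self.guardians = guardians  # minor's subject ID -> guardian's subject ID
        self.minors = minors
        self.consents: list[Consent] = []
        self.orders: list[LegalOrder] = []

    def grant(self, consent: Consent) -> None:
        subject = consent.subject_id
        # Minors cannot consent for themselves; only their registered guardian can.
        expected = self.guardians.get(subject) if subject in self.minors else subject
        if consent.granted_by != expected:
            raise PermissionError(f"{consent.granted_by} cannot consent for {subject}")
        self.consents.append(consent)

    def check(self, subject_id: str, consumer_id: str, purpose: str, today: date) -> tuple[bool, str]:
        # A legal order authorizes access regardless of the subject's consent.
        for order in self.orders:
            if (order.subject_id, order.consumer_id) == (subject_id, consumer_id):
                return True, f"legal order {order.reference}"
        for c in self.consents:
            if (c.subject_id, c.consumer_id, c.purpose) == (subject_id, consumer_id, purpose) and today <= c.expires:
                return True, f"consent granted by {c.granted_by}"
        return False, "no valid consent"


register = ConsentRegister(guardians={"child-1": "parent-1"}, minors={"child-1"})
register.grant(Consent("adult-1", "bank-x", "credit_check", "adult-1", date(2025, 1, 1)))
register.grant(Consent("child-1", "clinic-y", "treatment", "parent-1", date(2025, 1, 1)))
register.orders.append(LegalOrder("adult-2", "police-z", "order-123"))

today = date(2024, 6, 1)
print(register.check("adult-1", "bank-x", "credit_check", today))  # (True, 'consent granted by adult-1')
print(register.check("adult-1", "bank-x", "marketing", today))     # (False, 'no valid consent')
```

Binding each consent to a specific purpose is what stops a consumer from reusing data granted for one use (a credit check, say) for another (marketing).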

The intricacies of consent go beyond the scope of this discussion, but consent — both as a concept and a mechanism — remains integral to a data exchange system’s architecture design.

For example, Australia’s Data Exchange sets specific guidelines about when and how consent is to be obtained, including providing training materials for organizations that need to obtain consent. This proactive approach can help government organizations obtain consent through ethical and inclusive means.

Working through the complexities of deploying consent mechanisms takes time. Examples such as India’s Account Aggregator approach can highlight many of the facets that policymakers and implementers will need to address.

What policy considerations inform the technical architecture of national data exchange systems?

When it comes to national data exchange systems, policy decisions often drive technical architecture decisions. These policies can concern any of the subjects previously discussed, as well as myriad others related to the specific context. These decisions define the boundaries within which the technical architecture must operate, such as:

- Use cases — A clear articulation of who is intended to use the system and for what purposes, with an eye both to current and future expectations.

- Target outcomes — A clear articulation of what success looks like and the metrics and indicators that will be needed to evaluate that success.

- Governance — The plan for both technical and policy management and oversight of the system.

- Data ownership — Who has control over the diverse types of data within the system.

- Models — The architecture and policy design choices affecting how data is stored, delivered, and shared.

- Capacity — The ability for the data exchange system to scale with usage both in terms of technical capacity and governance efficiency.

- Licensing — The acceptable licensing models and agreements for data exchange software and hardware.

- Hosting — Where the technical equipment used for the data exchange is held and maintained.

There are many additional considerations beyond the examples listed here, and overlap does exist across considerations. They can generally be placed into one of four buckets:

- What is the purpose of the system?

- Who is going to use it for what purposes?

- What constraints do we have?

- What does success look like, and how do we measure it?

While complete clarity may not be possible, policymakers should get as close to it as they can. Policy changes that necessitate changes to a system’s architecture can be expensive, and the further along a project is, the more expensive they become. In some cases, certain changes may not be possible with the existing design.

To support policymakers in these efforts, mapping the current data ecosystem is important. This can highlight strengths and weaknesses in the current approaches and allow for more targeted efforts to address the greatest needs, both in terms of technology and policy.

Where to next?

There are many facets to good technical architecture design that make up national data exchange systems. We have outlined some of the foundational aspects, but there is much more to learn and consider.

That said, every situation is unique. Applying the principles provided here, with a focus on the needs and outcomes of people, can help set the pathway towards the right architecture and, ultimately, the right solution.

Over time, data exchange systems will be modified, upgraded, and even reworked. This is a natural progression as each system evolves and grows to meet the ever-shifting needs of people and other stakeholders.

Along the way, the decisions made, whether during design, implementation, or even redesign, will affect the uptake and adoption of data exchange systems. Ensuring that users buy in to, trust, and ultimately use these systems will be essential to their success. To learn more, read our research paper that highlights key insights on implementing integrated national data exchange systems.

We also encourage you to dive into our pool of resources that explores some of the ways data can be responsibly unlocked to drive shared benefits for governments, the private sector, and people – for example:

- Case studies that examine the differing approaches to integrated national data exchange systems in Bangladesh, Uganda, Rwanda, and Ghana.

- Primer on some of the emerging models that can effectively capture, manage, and share data.

- Spotlight papers that look into how data can be responsibly shared for climate response, such as through data trusts and open transaction networks.

- Expert comment on Africa’s digital transformation journey and the role of citizen portals within trusted data exchange systems.

Over the coming months, we will publish additional resources that dive deeper into some of the key decisions, tradeoffs, and resulting implications of technical design, implementation, and governance decisions around data exchange systems.